How to Integrate a Custom TLT Classification Model into NVIDIA® Jetson™ Modules?

WHAT YOU WILL LEARN

1- How to Generate an Engine Using TLT-Converter?

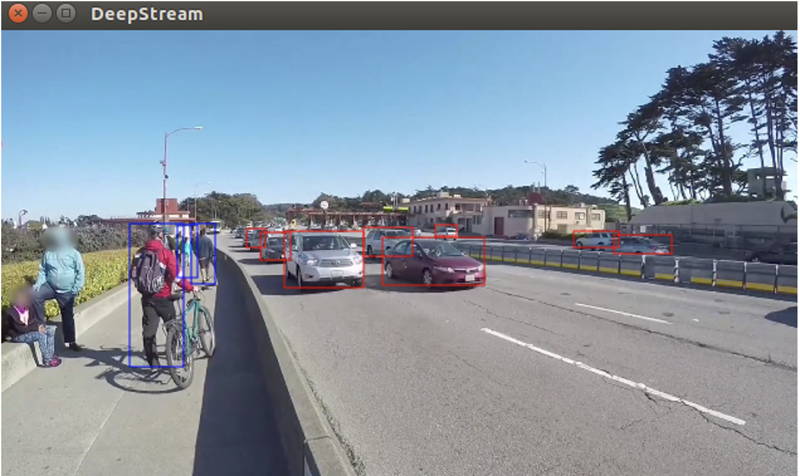

2- How to Deploy the Model in DeepStream?

ENVIRONMENT

Hardware: DSBOX-NX2

OS: JetPack 4.5

In this blog post, we will show you how to integrate a custom classification model into Jetson™ modules using the NVIDIA® Transfer Learning Toolkit (TLT) with DeepStream.

Before getting started, you will need to download tlt-converter, which generates the TensorRT engine, and ds-tlt-classification, which is used to test the model.

To install tlt-converter, go to the following page, then download and unzip the zip file for JetPack 4.5:

https://docs.nvidia.com/metropolis/TLT/tlt-user-guide/text/image_classification.html

You also need to install the OpenSSL development package:

sudo apt-get install libssl-dev

Then export the following environment variables, which point to the TensorRT library and header directories:

export TRT_LIB_PATH="/usr/lib/aarch64-linux-gnu"

export TRT_INC_PATH="/usr/include/aarch64-linux-gnu"
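Exported variables only last for the current shell session. The sketch below sets the same two variables and then checks, without failing, that the TensorRT library (libnvinfer) and header (NvInfer.h) are actually present at those paths. It assumes a standard JetPack layout; the warning messages are illustrative only.

```sh
#!/bin/sh
# Sketch: set the TensorRT paths used when building/running tlt-converter,
# then sanity-check them. Assumes the standard JetPack install locations.
set -eu

export TRT_LIB_PATH="/usr/lib/aarch64-linux-gnu"
export TRT_INC_PATH="/usr/include/aarch64-linux-gnu"

# Warn (but do not exit) if the TensorRT runtime library is missing.
if ! ls "$TRT_LIB_PATH"/libnvinfer.so* >/dev/null 2>&1; then
  echo "warning: libnvinfer not found in $TRT_LIB_PATH" >&2
fi

# Warn (but do not exit) if the TensorRT header is missing.
if [ ! -f "$TRT_INC_PATH/NvInfer.h" ]; then
  echo "warning: NvInfer.h not found in $TRT_INC_PATH" >&2
fi

# To keep these settings across sessions, add the two export lines to ~/.bashrc.
```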

To install ds-tlt, visit the link below and build deepstream_tlt_apps by following its instructions. You do not need to download the sample models, since we will be using our own model.

https://github.com/NVIDIA-AI-IOT/deepstream_tlt_apps#2-build-sample-application
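As a rough outline of those instructions, the sketch below clones the repository and builds it. It assumes git and DeepStream are already installed and that the device runs CUDA 10.2 (the version shipped with JetPack 4.5). The repository README remains the authority on which branch matches your DeepStream version and on any extra build steps.

```sh
#!/bin/sh
# Sketch: fetch and build deepstream_tlt_apps on the Jetson.
# Assumptions: git + DeepStream installed, CUDA 10.2 (JetPack 4.5).
set -eu

# The build expects CUDA_VER to be set, per the repository README.
export CUDA_VER=10.2

if [ -d /opt/nvidia/deepstream ]; then
  # Clone only once; re-running the script reuses the existing checkout.
  [ -d deepstream_tlt_apps ] || \
    git clone https://github.com/NVIDIA-AI-IOT/deepstream_tlt_apps.git
  # The sample-model download step is skipped on purpose: we use our own model.
  (cd deepstream_tlt_apps && make)
else
  echo "DeepStream not found under /opt/nvidia/deepstream; skipping build" >&2
fi
```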

How to Generate an Engine Using TLT-Converter?

Make sure you have the exported (.etlt) versions of the models you previously trained.

Now, we will generate an engine using tlt-converter. To see its usage, run the following command:

./tlt-converter -h

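We will show the steps for the FP16 model, but the same steps work for the FP32 model (use -t fp32 instead). In the command below, -k is the key that was used when the model was exported, -d gives the input dimensions as C,H,W, -e sets the output engine path, -t sets the engine data type, and -o names the output node.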

cd classification_exported_models/fp16

/home/nvidia/tlt-converter -k forecr -d 3,224,224 classification_model_fp16.etlt -e fp16.engine -t fp16 -o predictions/Softmax
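If you exported both precisions, a small script can build both engines in one go. This is a minimal sketch, assuming the converter sits at /home/nvidia/tlt-converter and that the FP32 export mirrors the FP16 naming (classification_exported_models/fp32/classification_model_fp32.etlt); both are assumptions, so adjust them to your layout. Unlike the command above, it runs from the parent directory, and it only prints the commands when the converter or model file is missing.

```sh
#!/bin/sh
# Sketch: generate TensorRT engines for the FP16 and FP32 exports.
# Hypothetical layout: $EXPORT_DIR/<precision>/classification_model_<precision>.etlt
set -eu

TLT_CONVERTER="${TLT_CONVERTER:-/home/nvidia/tlt-converter}"
EXPORT_DIR="${EXPORT_DIR:-classification_exported_models}"
KEY="${KEY:-forecr}"              # must match the key used at export time
INPUT_DIMS="3,224,224"            # C,H,W of the network input
OUTPUT_NODE="predictions/Softmax" # output node of the classifier

convert_model() {
  precision="$1"
  dir="${EXPORT_DIR}/${precision}"
  model="${dir}/classification_model_${precision}.etlt"
  engine="${dir}/${precision}.engine"
  # Build the argument list; the input .etlt file goes last.
  set -- "$TLT_CONVERTER" -k "$KEY" -d "$INPUT_DIMS" -o "$OUTPUT_NODE" \
         -t "$precision" -e "$engine" "$model"
  if [ -x "$TLT_CONVERTER" ] && [ -f "$model" ]; then
    "$@"
  else
    # Dry run: show what would be executed.
    echo "dry run: $*"
  fi
}

for precision in fp16 fp32; do
  convert_model "$precision"
done
```

The engine's data type follows the -t value, so each exported model gets its own engine next to its .etlt file.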

How to Deploy the Model in DeepStream?

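Finally, you can run ds-tlt-classification to test the model. It accepts JPEG images and H.264 streams as test input, and you can also use the sample videos and pictures that ship with DeepStream. The command below runs one of DeepStream's reference pipelines on those sample streams: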

cd /opt/nvidia/deepstream/deepstream-5.0

deepstream-app -c samples/configs/deepstream-app/source4_1080p_dec_infer-resnet_tracker_sgie_tiled_display_int8.txt
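The reference pipeline uses NVIDIA's bundled models. To run your own classifier instead, DeepStream's Gst-nvinfer plugin needs a configuration file that points at your engine. The sketch below writes one. MODEL_DIR, the config file name and labels.txt are hypothetical; the preprocessing values (net-scale-factor, offsets, model-color-format) are taken from NVIDIA's TLT classification sample config and assume default TLT preprocessing. The infer-dims and output-blob-names entries must match the -d and -o values passed to tlt-converter.

```sh
#!/bin/sh
# Sketch: write a Gst-nvinfer config for the custom TLT classifier.
# Paths and file names below are hypothetical; adjust to your setup.
set -eu

MODEL_DIR="${MODEL_DIR:-/home/nvidia/classification_exported_models/fp16}"
CONFIG="${CONFIG:-config_infer_custom_classifier.txt}"

cat > "$CONFIG" <<EOF
[property]
gpu-id=0
# Preprocessing values from NVIDIA's TLT classification sample config
net-scale-factor=1.0
offsets=123.67;116.28;103.53
model-color-format=1
batch-size=1
# Engine built by tlt-converter; the .etlt and key let nvinfer rebuild it
model-engine-file=${MODEL_DIR}/fp16.engine
tlt-encoded-model=${MODEL_DIR}/classification_model_fp16.etlt
tlt-model-key=forecr
# Hypothetical label file: class names on one line, separated by semicolons
labelfile-path=${MODEL_DIR}/labels.txt
# Must match tlt-converter's -d (C;H;W) and -o; 0 = NCHW input order
infer-dims=3;224;224
uff-input-order=0
uff-input-blob-name=input_1
output-blob-names=predictions/Softmax
# 0=FP32, 1=INT8, 2=FP16
network-mode=2
# 1 = classifier
network-type=1
# 1 = classify the whole frame
process-mode=1
classifier-threshold=0.2
gie-unique-id=1
EOF

echo "wrote $CONFIG"
```

You can pass this file to ds-tlt-classification through its config option as described in the deepstream_tlt_apps README, or reference it with config-file= in the [primary-gie] group of a deepstream-app configuration. To classify objects cropped by a detector instead of whole frames, the model would run as a secondary GIE with process-mode=2.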

Thank you for reading our blog post.