How to Run the DeepSeek R1 AI Model Locally on DSBOARD-ORNX

WHAT YOU WILL LEARN

1-Install CUDA

2-Install Ollama

3-Install Docker

4-Install Open WebUI

5-Open The Website

6-Videos About The Process

Hardware: DSBOARD-ORNX

OS: JetPack 6.x


In this tutorial, we will learn how to install the DeepSeek R1 model with Ollama and run it through Open WebUI, a web-based AI chat interface.

Install CUDA

Before installing the AI software, we need to install the CUDA components through NVIDIA SDK Manager. When the installation has finished, we can check it with the nvcc command:

$ nvcc --version
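On JetPack, the CUDA toolkit is normally installed under /usr/local/cuda, and that directory is not always on PATH, so nvcc can report "command not found" even after a successful installation. A minimal sketch, assuming that default location (adjust CUDA_HOME if your image differs):

```shell
# Assumed default CUDA toolkit location on JetPack; change it if your image differs
CUDA_HOME=/usr/local/cuda

# Prepend the CUDA bin directory to PATH only if it is not already there
case ":$PATH:" in
  *":$CUDA_HOME/bin:"*) ;;
  *) export PATH="$CUDA_HOME/bin:$PATH" ;;
esac

# Print the compiler version, or a hint if nvcc is still missing
if command -v nvcc >/dev/null 2>&1; then
  nvcc --version
else
  echo "nvcc not found in $CUDA_HOME/bin; check the CUDA components in SDK Manager" >&2
fi
```

To keep the setting in new terminals, add export PATH=/usr/local/cuda/bin:$PATH to the end of ~/.bashrc.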

Install Ollama

On the Ollama website (ollama.com), click the Download button and select Linux, then copy the install command and paste it into a terminal on the DSBOARD-ORNX.
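For reference, the Linux command on the download page at the time of writing runs Ollama's install script; compare it with the site before running it. The sketch below wraps it in two checks of our own: it only runs on a Jetson (L4T images ship /etc/nv_tegra_release) and it skips the download if ollama is already on PATH.

```shell
# /etc/nv_tegra_release is present on NVIDIA L4T/JetPack images; use it to make sure
# this runs on the board itself and not on a desktop PC
if [ ! -f /etc/nv_tegra_release ]; then
  echo "This does not look like a Jetson (no /etc/nv_tegra_release); skipping." >&2
elif command -v ollama >/dev/null 2>&1; then
  echo "Ollama is already installed: $(ollama --version)"
else
  # Ollama's official Linux install script
  curl -fsSL https://ollama.com/install.sh | sh
fi
```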

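Next, we visit the deepseek-r1 page in the Ollama model library and select a model size. In this tutorial, we select the 14b model.

Copy the command shown there and paste it into the terminal again. On the first run, Ollama downloads the model before starting the chat.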

$ ollama run deepseek-r1:14b

When the download has finished, a terminal-based chat appears. You can type the /bye command to close the chat.

Note! If you don’t need a web-based chat interface, you can simply run the ollama run deepseek-r1:14b command and use the model right away. In that case, you don’t need to install Docker and Open WebUI.
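Besides the terminal chat, the Ollama service listens on a local HTTP API, by default on port 11434; this is the address Open WebUI uses later through OLLAMA_BASE_URL. A minimal sketch of a one-shot, non-streaming request to the /api/generate endpoint, assuming the default port and the deepseek-r1:14b model pulled above (the prompt text is only an example):

```shell
# Default Ollama API address and the model pulled earlier in this tutorial
OLLAMA_URL="http://127.0.0.1:11434"
MODEL="deepseek-r1:14b"
PROMPT="Explain what a carrier board is in one sentence."

# Build the JSON body; "stream": false returns one JSON object instead of a stream of chunks.
# printf does no JSON escaping, so the prompt must not contain double quotes or backslashes.
payload=$(printf '{"model":"%s","prompt":"%s","stream":false}' "$MODEL" "$PROMPT")

# Only send the request if the Ollama server answers on /api/version
if curl -fsS "$OLLAMA_URL/api/version" >/dev/null 2>&1; then
  curl -fsS "$OLLAMA_URL/api/generate" -d "$payload" \
    || echo "Request failed; is $MODEL pulled? Check with: ollama list" >&2
  echo
else
  echo "No Ollama server reachable at $OLLAMA_URL" >&2
fi
```

The generated text is in the response field of the returned JSON.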

Install Docker

We have to install Docker because the Open WebUI chat interface runs in a Docker container.

$ sudo apt update

$ sudo apt install -y nvidia-container

$ curl https://get.docker.com | sh && sudo systemctl --now enable docker

$ sudo nvidia-ctk runtime configure --runtime=docker
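nvidia-ctk runtime configure registers the NVIDIA runtime in Docker's daemon configuration file, /etc/docker/daemon.json, and the running Docker daemon should then be restarted so it loads the new runtime. A sketch that checks the file and restarts Docker; has_nvidia_runtime is a hypothetical helper, and its grep is a rough text match rather than a JSON parser:

```shell
# Hypothetical helper: succeed if the given daemon config mentions an "nvidia" runtime
has_nvidia_runtime() {
  [ -f "$1" ] && grep -q '"nvidia"' "$1"
}

DAEMON_JSON=/etc/docker/daemon.json
if has_nvidia_runtime "$DAEMON_JSON"; then
  # Restart dockerd so it picks up the new runtime entry
  sudo systemctl restart docker
  # The Runtimes line of docker info should now include nvidia
  sudo docker info | grep -i 'runtimes'
else
  echo "No nvidia runtime found in $DAEMON_JSON; re-run the nvidia-ctk step" >&2
fi
```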

Add your user to the docker group so that you don't need to run docker commands with sudo.
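On Ubuntu-based JetPack this is usually done with usermod, and the new group only applies to new login sessions. A sketch that adds the group only when it is missing; user_in_group is a hypothetical helper name:

```shell
# Hypothetical helper: succeed if user $1 is a member of group $2
user_in_group() {
  id -nG "$1" | tr ' ' '\n' | grep -qx "$2"
}

ME="${USER:-$(id -un)}"
if user_in_group "$ME" docker; then
  echo "$ME is already in the docker group"
elif grep -q '^docker:' /etc/group; then
  # -aG appends the group; without -a, usermod would replace the user's other supplementary groups
  sudo usermod -aG docker "$ME"
  echo "Added $ME to the docker group; log out and back in (or run: newgrp docker) to apply it"
else
  echo "There is no docker group yet; finish the Docker installation first" >&2
fi
```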

Test Docker with the hello-world container.
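The usual check is Docker's hello-world image, run without sudo once the new group membership is active. A sketch; looks_like_hello_world is a hypothetical helper that only searches the output for the greeting line the image prints:

```shell
# Hypothetical helper: succeed if stdin contains the greeting printed by the hello-world image
looks_like_hello_world() {
  grep -q 'Hello from Docker!'
}

# --rm deletes the test container once it exits
if docker run --rm hello-world 2>&1 | looks_like_hello_world; then
  echo "Docker works without sudo"
else
  echo "hello-world failed; check that dockerd is running and that your group change is active" >&2
fi
```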

The Docker installation has finished successfully.

Install Open WebUI

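Open WebUI runs as a Docker container. Open a terminal and paste the command below. It uses the host network, so the container can reach Ollama at 127.0.0.1:11434, and it stores its data in the open-webui folder in your home directory.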

$ docker run -d --network=host -v ${HOME}/open-webui:/app/backend/data -e OLLAMA_BASE_URL=http://127.0.0.1:11434 --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Then run the command below. If the open-webui container shows a healthy status, the local server has started successfully.

$ docker ps
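In the docker ps output, the STATUS column of a container with a health check reads, for example, Up 2 minutes (healthy), and the first start can take a little while. Instead of re-running docker ps by hand, you can poll the container's health state with docker inspect. A sketch, assuming the container name open-webui from the command above; wait_healthy and its retry values are our own illustrative choices:

```shell
# Hypothetical helper: poll a container's health status until it reports "healthy".
# Arguments: container name, number of tries, seconds to wait between tries.
wait_healthy() {
  local name=$1 tries=$2 delay=$3 i=0 status=""
  while [ "$i" -lt "$tries" ]; do
    status=$(docker inspect --format '{{.State.Health.Status}}' "$name" 2>/dev/null) || true
    if [ "$status" = "healthy" ]; then
      echo "$name is healthy"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "$name is not healthy yet (last status: ${status:-unknown})" >&2
  return 1
}

if command -v docker >/dev/null 2>&1; then
  # Up to 60 tries, 5 seconds apart (about 5 minutes)
  wait_healthy open-webui 60 5 || echo "Check the container log with: docker logs open-webui" >&2
fi
```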

Open The Website

1- Use a Personal Computer

Open a browser (Firefox, Chrome, etc.) on a computer in the same network and go to the address below, replacing JETSON_IP with the board's IP address:

http://JETSON_IP:8080

2- Use the DSBOARD-ORNX

Open a browser (Firefox, Chrome, etc.) on the DSBOARD-ORNX and go to:

http://localhost:8080
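JETSON_IP stands for the board's address on your network. A sketch for finding it and for checking, on the board itself, that Open WebUI answers on port 8080; webui_url is a hypothetical helper, and hostname -I may also list Docker's own bridge address, so pick the one on your LAN:

```shell
# Hypothetical helper: build the Open WebUI address for a given host (port 8080, as used above)
webui_url() {
  printf 'http://%s:8080\n' "$1"
}

# hostname -I prints every address configured on the board; print a candidate URL for each
for ip in $(hostname -I 2>/dev/null); do
  # Skip IPv6 addresses, which would need [brackets] in a URL
  case $ip in *:*) continue ;; esac
  echo "From your PC, try: $(webui_url "$ip")"
done

# On the board itself: print the HTTP status code of the local web UI (000 means nothing answered)
if command -v curl >/dev/null 2>&1; then
  curl -s -o /dev/null -w '%{http_code}\n' "$(webui_url localhost)" || true
fi
```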

Click Get started and create an admin account.

All models installed via Ollama appear on the website.
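Open WebUI reads this list from the Ollama server given in OLLAMA_BASE_URL. To see what that server reports, you can query Ollama's /api/tags endpoint directly; model_names is a hypothetical helper that extracts the name fields with grep and sed rather than a real JSON parser:

```shell
# Default Ollama address, the same one passed to Open WebUI in OLLAMA_BASE_URL
OLLAMA_URL="http://127.0.0.1:11434"

# Hypothetical helper: print one model name per line from an /api/tags JSON reply on stdin
# (a plain grep/sed text match, not a full JSON parser)
model_names() {
  grep -o '"name": *"[^"]*"' | sed 's/.*"\([^"]*\)"$/\1/'
}

if tags=$(curl -fsS "$OLLAMA_URL/api/tags" 2>/dev/null); then
  printf '%s\n' "$tags" | model_names
else
  echo "No Ollama server reachable at $OLLAMA_URL" >&2
fi
```

To add another model, for example the 8b size used in the demo video, run ollama pull deepseek-r1:8b; it should then show up in this list as well.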

Enjoy your own private AI.

Videos About The Process

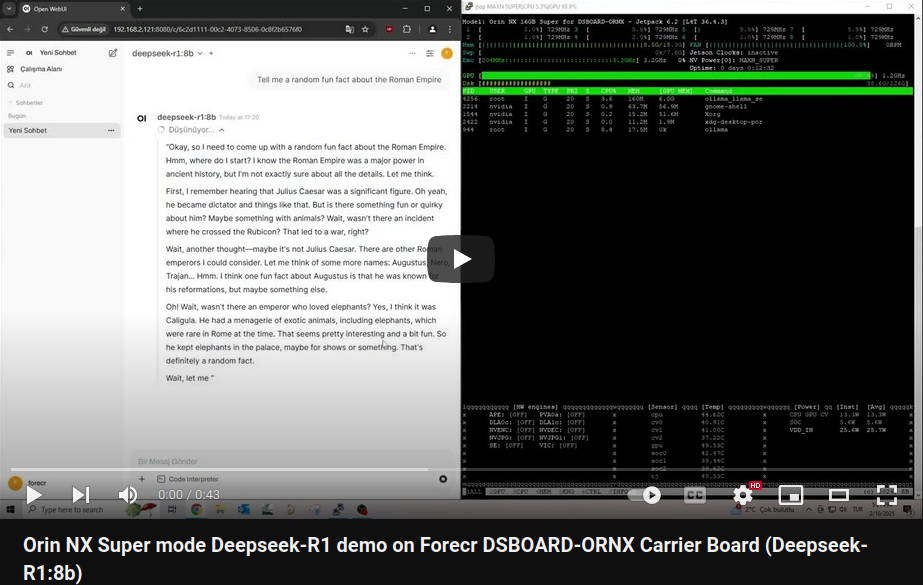

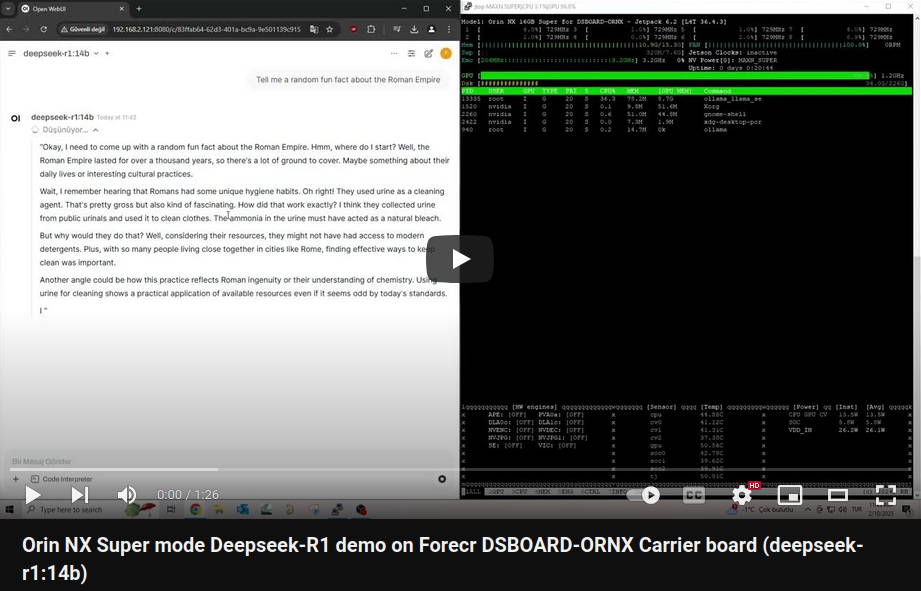

Orin NX Super Mode DeepSeek-R1 demo on the Forecr DSBOARD-ORNX carrier board (deepseek-r1:8b)

For more information on carrier boards designed to support Jetson Orin NX and other Jetson modules, check out our collection. These boards are built for edge AI deployments, offering robust connectivity and performance in industrial environments.

This tutorial is based on the DSBOARD-ORNX, a rugged and compact carrier board engineered specifically for Jetson Orin NX.

With support for NVMe, USB, UART, and display interfaces, it is well suited to running LLMs such as DeepSeek R1 at the edge, fully locally and without the cloud. It is optimized for use cases such as autonomous systems, robotics, and AI inference at the edge.

Thank you for reading our blog post.