How to Train a Custom Semantic Segmentation Dataset using NVIDIA® Transfer Learning Toolkit?

WHAT YOU WILL LEARN?

1- How to configure the dataset?

2- How to train and prune the model?

3- How to retrain the pruned model?

How to export the model?

ENVIRONMENT

Hardware: Corsair Gaming Desktop PC

OS: Ubuntu 18.04.5 LTS

GPU: GeForce RTX 2060 GPU (6 GB)

Configure The Dataset

In this blog post, we will train a custom semantic segmentation dataset with UNet. First, we will download and configure the dataset. Then, we will train and prune the model. Finally, we will retrain the pruned model and export it.

The requirements are wrote in Transfer Learning Toolkit’s (TLT) Quick Start page (In this guide, we won’t use NGC docker registry and API key).

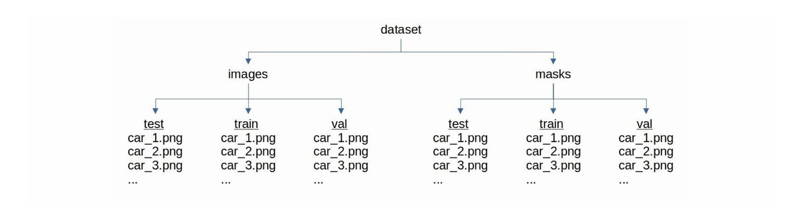

To begin with, create “~/tlt_experiments” folder and move the extracted files into it. This created folder will use as a mutual folder between Docker and Host PC. Then, download the car dataset from here. Configure the dataset folder like below. The dataset detailed from here.

In our dataset we renamed all files in train folder and copy 30 pictures to test and val folders.

Now, download the archived training file to the download part of this post. Start into the Docker container, change the current directory to “tlt_experiments” and download the ResNet-18 model for training with these terminal commands:

docker run --gpus all -it -v ~/tlt-experiments:/workspace/tlt-experiments nvcr.io/nvidia/tlt-streamanalytics:v3.0-py3

cd /workspace/tlt-experiments/

wget https://api.ngc.nvidia.com/v2/models/nvidia/tlt_semantic_segmentation/versions/resnet18/files/resnet_18.hdf5

Training and Pruning the Model

Now, we can train our dataset (This process may take a while).

unet train -e config.txt -r /workspace/tlt-experiments/experiment_output/ -k forecr -m resnet_18.hdf5

ls -lh experiment_output/weights/

Prune the model to get more stable results with these terminal commands:

unet prune -m experiment_output/weights/model.tlt -e config.txt -o /workspace/tlt-experiments/experiment_output/weights/model_pruned.tlt -k forecr

ls -lh experiment_output/weights/

Retraining the Pruned Model

NVIDIA recommends that you retrain this pruned model over the same dataset to regain the accuracy. Retrain the pruned model and evaluate it.

unet train -e config.txt -r /workspace/tlt-experiments/experiment_output_retrain/ -k forecr -m /workspace/tlt-experiments/experiment_output/weights/model_pruned.tlt

ls -lh experiment_output_retrain/weights/

mkdir /workspace/tlt-experiments/experiment_output_result

touch experiment_output_result/log.txt

unet evaluate -e config.txt -m /workspace/tlt-experiments/experiment_output_retrain/weights/model.tlt -o /workspace/tlt-experiments/experiment_output_result/ -k forecr

The final model located at (in Docker) :

“/workspace/tlt-experiments/experiment_output_retrain/weights/model.tlt”

and at (in Host PC) :

“~/tlt-experiments/experiment_output_retrain/weights/model.tlt”

Exporting the Model

Export the model as “.etlt” file. We exported each type of models (FP16, FP32, INT8) with these commands:

unet export -e config.txt -m /workspace/tlt-experiments/experiment_output_retrain/weights/model.tlt -k forecr -o exported_models/fp16/segmentation_model_fp16.etlt --data_type fp16 --gen_ds_config

unet export -e config.txt -m /workspace/tlt-experiments/experiment_output_retrain/weights/model.tlt -k forecr -o exported_models/fp32/segmentation_model_fp32.etlt --data_type fp32 --gen_ds_config

unet export -e config.txt -m /workspace/tlt-experiments/experiment_output_retrain/weights/model.tlt -k forecr -o exported_models/int8/segmentation_model_int8.etlt --data_type int8 --gen_ds_config --cal_cache_file exported_models/int8/cal.bin --cal_image_dir /workspace/tlt-experiments/dataset/images/train --cal_data_file exported_models/int8/calibration.tensor

Thank you for reading our blog post.